Forty-one percent of voice assistant users are concerned about trust, privacy and passive listening, according to a new report from Microsoft focused on consumer adoption of voice and digital assistants. And perhaps people should be concerned — all the major voice assistants, including those from Google, Amazon, Apple and Samsung as well as Microsoft, employ humans who review the voice data collected from end users.

But people didn’t seem to know that was the case. So when Bloomberg recently reported on the global team at Amazon that reviews audio clips from commands spoken to Alexa, there was some backlash. Beyond the discovery that our A.I. helpers have humans behind them, there were concerns over the type of data the Amazon employees and contractors were hearing: criminal activity and, in a few cases, even assaults, as well as the otherwise odd, funny or embarrassing things the smart speakers picked up.

Today, Bloomberg again delves into the potential user privacy violations by Amazon’s Alexa team.

The report said the team auditing Alexa commands has had access to location data and, in some cases, can find a customer’s home address. This is because the team has access to the latitude and longitude coordinates associated with a voice clip, which can be easily pasted into Google Maps to tie the clip to where it came from. Bloomberg said it wasn’t clear how many people had access to the system where the location information was stored.

This is precisely the kind of privacy violation that could damage user trust in the popular Echo speakers and other Alexa devices and, by extension, in other voice assistant platforms.

While some users may not have realized the extent of human involvement on Alexa’s backend, Microsoft’s study indicates an overall wariness around the potential for privacy violations and abuse of trust that could occur on these digital assistant platforms.

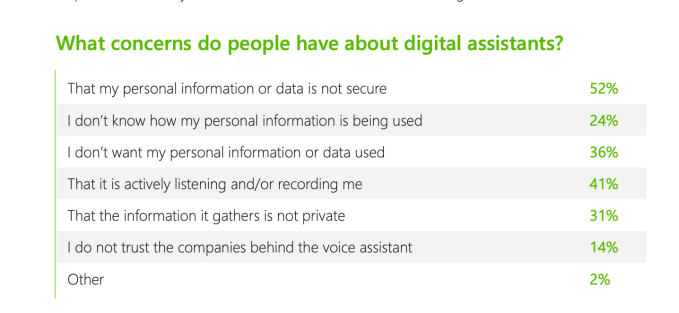

For example, 52 percent of those surveyed by Microsoft said they worried their personal information or data was not secure, and 24 percent said they don’t know how it’s being used. 36 percent said they didn’t even want their personal information or data to be used at all.

These numbers indicate that the assistant platforms should offer all users the ability to easily and permanently opt out of the data collection practices — one click to say that their voice recording and private information will go nowhere, and will never be seen.

41 percent of people also worried their voice assistant was actively listening or recording them, and 31 percent believed the information the assistant collected from them was not private.

14 percent also said they didn’t trust the companies behind the voice assistant — meaning Amazon, Google and all the others.

“The onus is now on tech builders to respond, incorporate feedback and start building a foundation of trust,” the report warns. “It is up to today’s tech builders to create a secure conversational landscape where consumers feel safe.”

Though the study indicates people have worries about their personal information, it doesn’t necessarily mean they want to shut off access to that data entirely. Some may want to share their email and home address so that Amazon can ship an item they order by voice, for instance. Others may even opt into sharing more information if offered some kind of tangible reward, the report also notes.

Despite all these worries, people largely said they preferred using voice over keyboards and touch screens. Even at this early stage, 57 percent said they would rather speak to a digital assistant, and 34 percent said they like to both type and speak, as needed.

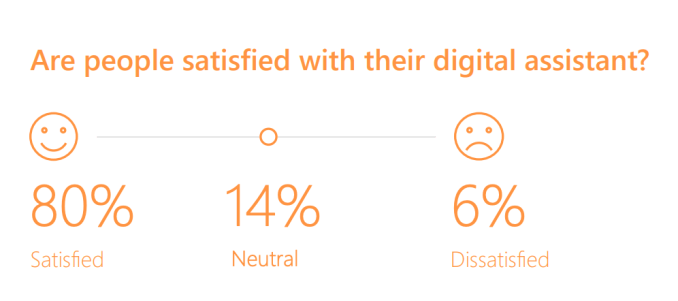

A majority, 80 percent, said they were “somewhat” or “very” satisfied with their digital assistants. Over 66 percent said they used digital assistants weekly, and 19 percent used them daily. (We should note this refers not just to voice assistants but to any digital assistant.)

These high satisfaction numbers mean digital and voice assistants are not likely to go away, but the mistrust issues and potential for abuse could lead consumers to use them less or, in time, even switch to a brand that offers more security.

Imagine, for example, if Amazon et al. failed to clamp down on employee access to data, as Apple launched a mass market voice device for the home, similar in functionality and pricing to a Google Home mini or Echo Dot. That could shift the voice landscape further down the road.

The full report, which also examines voice trends and adoption rates, is here.