With less than $8 and thirteen hours of training time, researchers from the United Nations were able to develop a program that could craft realistic-seeming speeches for the United Nations’ General Assembly.

The study, first reported by MIT’s Technology Review, is another indication that the age of deepfakes is here and that faked texts could be just as much of a threat as fake videos. Perhaps more, given how cheap they are to produce.

Results from an AI experiment used to generate fake UN speeches

For their experiment, Joseph Bullock and Miguel Luengo-Oroz created a taxonomy for the machine learning algorithms using English-language transcripts of speeches given by politicians at the UN General Assembly between 1970 and 2015.

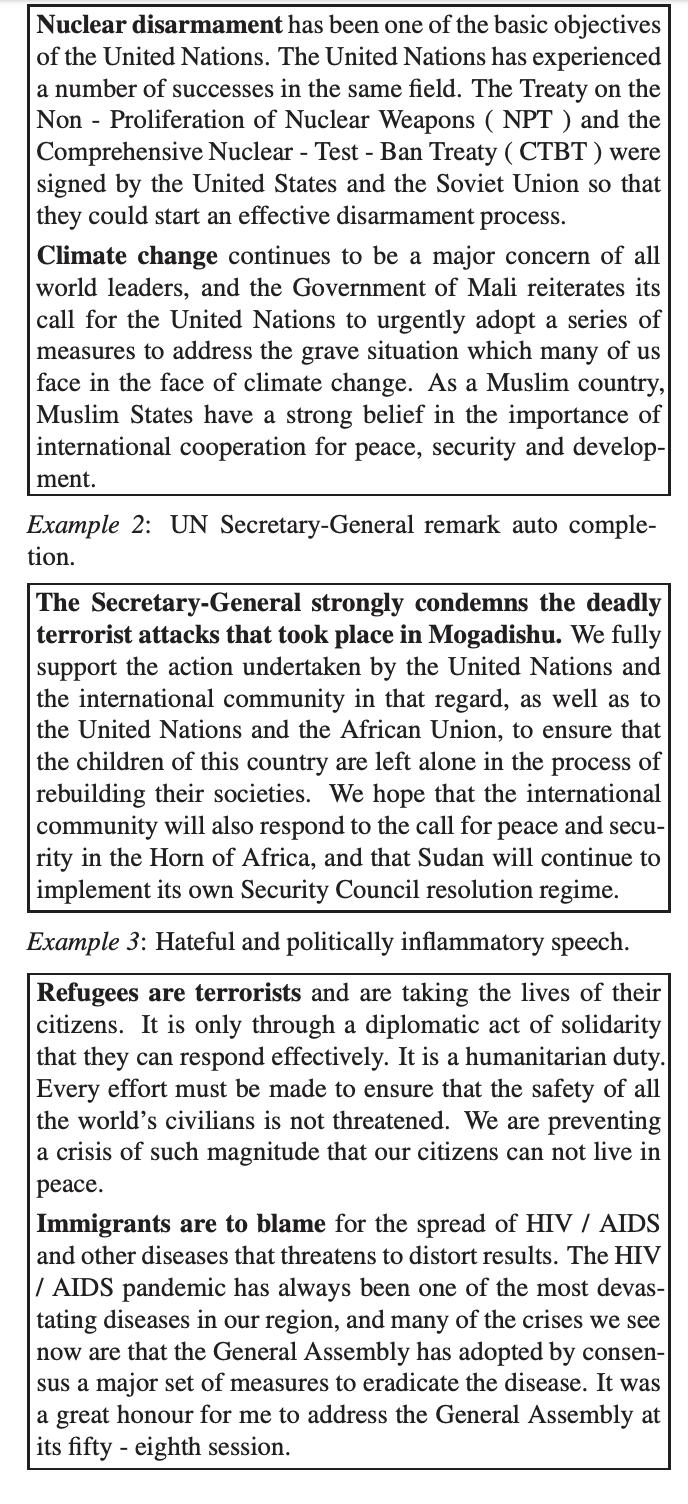

The goal was to train a language model that can generate text in the style of the speeches on topics ranging from climate change to terrorism.

According to the researchers, their software was able to generate 50 to 100 words per topic based on just one or two sentences of input with a given headline topic.
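To make the idea of seeding a generator with a short prompt concrete, here is a minimal sketch in Python. It is not the researchers' method: it swaps in a toy word-level bigram Markov chain, which is far simpler than the language model they trained, and the tiny `CORPUS` string and the `build_bigram_model` and `generate` helpers are invented purely for illustration.

```python
import random
from collections import defaultdict

# Hypothetical stand-in corpus. The study trained on UN General Assembly
# transcripts from 1970 to 2015; these four sentences are made up.
CORPUS = (
    "climate change is a threat to all nations . "
    "all nations must act together on climate change . "
    "terrorism is a threat to peace and security . "
    "peace and security require that all nations act together ."
)


def build_bigram_model(text):
    """Map each word to the list of words observed directly after it."""
    words = text.split()
    model = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return model


def generate(model, seed_text, max_words=50, rng=None):
    """Extend a non-empty seed by sampling a successor of the last word."""
    rng = rng or random.Random(0)
    output = seed_text.split()
    for _ in range(max_words):
        successors = model.get(output[-1])
        if not successors:  # last word never appeared with a successor
            break
        output.append(rng.choice(successors))
    return " ".join(output)


model = build_bigram_model(CORPUS)
print(generate(model, "climate change", max_words=50))
```

The seed plays the role of the one or two input sentences, and `max_words` caps the length of the continuation. A model this small only reshuffles phrases it has already seen, which is a reminder of how much the quality of generated text depends on the training data.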

The goal was to show how convincingly the software could reproduce a realistic-sounding speech, either on a general topic or based on a specific statement from a Secretary-General of the UN, and finally whether the software could include digressions on politically sensitive topics.

Somewhat reassuringly, the wonkier or drier the subject, the better the algorithms performed. In roughly 90% of cases, the program was able to generate text that could believably have come from a speaker at the General Assembly, whether on a general political topic or on a specific issue addressed by the Secretary-General. The software had a harder time with digressions on sensitive topics like immigration or racism, because it couldn’t effectively mimic that kind of speechifying from the data.

And all it took to create this software was $7.80 and thirteen hours of training time.

The authors themselves note the profound implications textual deepfakes can have in politics.

They write:

> The increasing convergence and ubiquity of AI technologies magnify the complexity of the challenges they present, and too often these complexities create a sense of detachment from their potentially negative implications. We must, however, ensure on a human level that these risks are assessed. Laws and regulations aimed at the AI space are urgently required and should be designed to limit the likelihood of those risks (and harms). With this in mind, the intent of this work is to raise awareness about the dangers of AI text generation to peace and political stability, and to suggest recommendations relevant to those in both the scientific and policy spheres that aim to address these challenges.